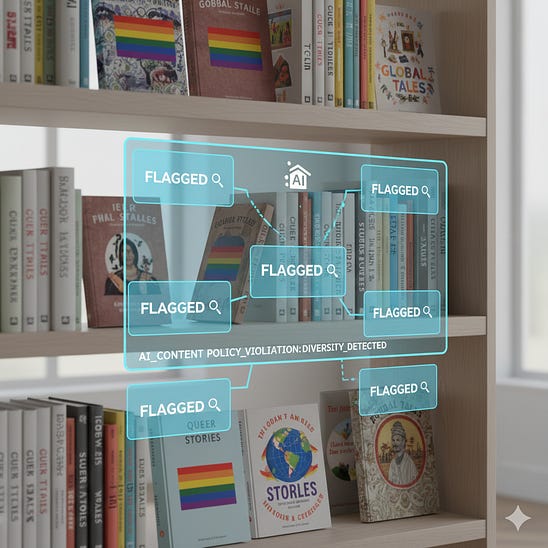

Stay Ahead with AI in Libraries & Education – Insights, Tools, & Trends!

The Most Dangerous AI Tool for Libraries Yet

How Class-Shelf Plus v3 quietly turns censorship into an automated workflow and why every librarian should be alarmed.

Special Edition: Every Librarian Needs to Read This

AI, Censorship, and the Alarming Rise of Automated Book Flagging

A few weeks ago, I wrote about how AI is being used in book censorship. I want to let you know about a new investigation that every librarian, educator, and district leader needs to read. Jason Koebler’s article, “AI Is Supercharging the War on Libraries, Education, and Human Knowledge,” exposes how CLCD’s new product, Class-Shelf Plus v3, is being positioned as an AI solution for “managing” classroom and school collections.

Here is the article:

And here is the vendor’s press release:

As someone who has experienced censorship, has lost a job because of it, and writes extensively about AI in education and libraries, I find this trend deeply concerning. AI tools are now being built and marketed in ways that speed up the very processes used to remove books. We need to be clear about what that means.

When one person’s judgment becomes an algorithm

What is considered “questionable” to one person may not be to another. Librarians understand this. Our work is grounded in professional review, community standards, developmentally appropriate practice, and a commitment to intellectual freedom. AI systems do not understand nuance. They apply whatever criteria they are given, and right now those criteria are being shaped by political pressure.

When you tell an algorithm to flag titles for “sensitive content” or “potentially controversial themes” in today’s climate, it will overwhelmingly identify books about LGBTQ lives, race, immigration, and honest history. That is not speculation. It is the predictable outcome of building a screening tool inside an environment where those topics are already under attack. Once the criteria exist, the model applies them at scale with no context and no understanding of student needs. A book becomes a pattern match. A life experience becomes a risk signal.

“It’s going to be used to remove books.”

The article includes an interview with Jaime Taylor, discovery and resource management systems coordinator for the W. E. B. Du Bois Library at UMass Amherst. Her warning is one that every librarian should take seriously:

Her point is exactly right. Even if an AI system produces inaccurate results, the risk is that someone under pressure or short on time will accept them as legitimate. And if the tool produces accurate results based on biased criteria, the outcome is simply more efficient censorship.

Why this moment should concern all of us

Class-Shelf Plus v3 is being sold as an efficiency tool, with claims that it can reduce manual review workloads by “more than 80 percent.” That framing ignores the bigger issue. When AI provides the list, the burden shifts to the human to prove why a book should stay. That is the reverse of how ethical collection development works.

This is especially dangerous for students who already see their identities challenged in public discourse. When the same stories that are targeted ideologically also become the ones flagged algorithmically, the result is not neutrality. It is amplified harm.

My concern, as both a librarian and someone who writes about AI

This work is personal. I have lived through censorship efforts. I have been the target of organized pressure campaigns. I have lost a job for defending students’ right to read. And I also spend a great deal of time studying AI’s role in education.

From that vantage point, tools like Class-Shelf Plus v3 are not just concerning. They are a direct threat to the foundational values of our profession. They turn political anxieties into automated workflows. They normalize the idea that librarians should follow an algorithm rather than exercise professional judgment. They frame removal as compliance, not censorship.

We need to say this plainly. AI systems built to “flag” books are not neutral.

A call to action

Read the article. Share it with colleagues. Ask your district if they are considering tools like Class-Shelf Plus v3. Push for policy before procurement, and insist on transparency and safeguards when AI intersects with collection development.
Above all, do not let automated systems replace the careful, human work that librarians do every day. Our students deserve better than an algorithm that decides which stories are safe enough for them to read.

Thank you so much for subscribing and supporting me!

📘 The AI For Educators Series
Written by Elissa Malespina, now available in eBook and paperback!

Portions of this newsletter are enhanced using AI-powered tools, with human review ensuring accuracy and quality.

© 2025 Elissa Malespina

-----

Jenny Lussier

Library Media Specialist

Brewster Elementary School
